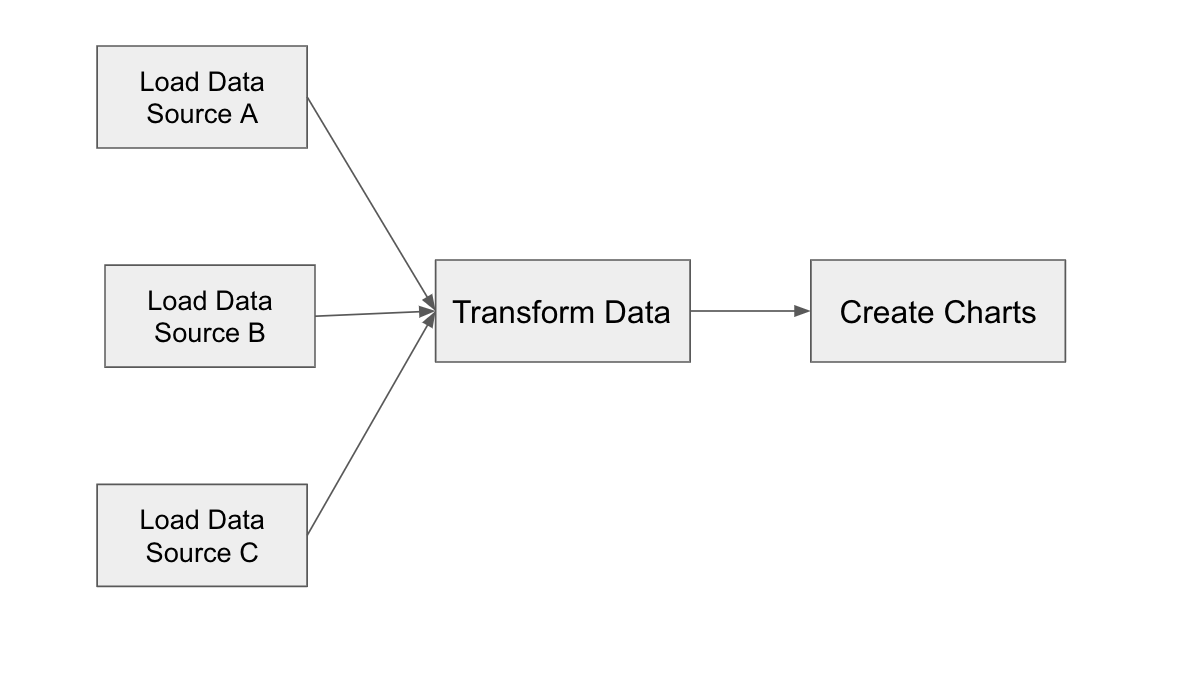

Kindly set the start_date to be the current date and the schedule_interval to None so that the DAG does not reschedule itself: import datetime `payment_expire_at`, `payed_at`, `cancel_flg`, `canceled_at`,ĭefine the dag object for the file. `lot_status`, `fixed_total_price`, `payment_status`, `receive_method_id`, `total_ticket_quantity`, `temp_lot_status`, `event_id`, `release_id`, `payment_method_id`, Here’s the SQL query: query = """SELECT `id`, `ordered_at`, `user_id`, Now the workflow graph should look like following:Ĭreate a DAG file named etl_single_dag.py at the dag_folder as seen in my previous blog: Apache Airflow & DAGs by examples. The updated workflow allowed the team to use predefined operators from the package, removing the need to test operators as those operators are official and have been well-tested before release. Google Cloud Storage to BigQuery (Load GCS to BQ) (Load).MySQL to Google Cloud Storage (Query + push result to GCS) (Extract/Transform).After researching, our team decided to compact the workflow to 2 steps: In Airflow, to build a workflow, suitable operators must be used and upstream/downstream relationships must be specified. Push the query result as a file to the pre-defined Google Cloud Storage bucket.Query meaningful columns from the table.

Here’s the screenshot of the order table.įrom the client’s demand, we can synchronize each meaningful table following a common series of tasks: The incremental load problem (daily synchronization) will be discussed in later blogs.

AIRFLOW ETL FULL

In this blog, firstly we deal with the full load problem, that is, to extract and load the whole table content to the data warehouse. The client wants the system to run on Airflow 1.10.x. For simplicity, the scenario here is limited to operations on one table only: The client wishes to synchronize data from selected columns from the table orders, first to Google Cloud Storage bucket then to BigQuery. Given a database FL_Business with several tables that is used for the business of the client company. Airflow is said to be built with ETL in mind, so it is a good candidate for ETL workflows of any scale. It is a crucial first part of the two parts featured in our LiMDa project (the second one being data refinement). It is the process of aggregating data from various source to a centralized data warehouse so that the data can be analyzed later on. In today’s blog, I will be guiding you through the process of creating a simple ETL workflow from local database to data warehouse, using Airflow as the backend.Įxtract – Transform – Load is a three-step process commonly seen in the context of data-centric projects.

AIRFLOW ETL HOW TO

In my last blog, I have given a quick guide on how to use Airflow in the most basic way.

0 kommentar(er)

0 kommentar(er)